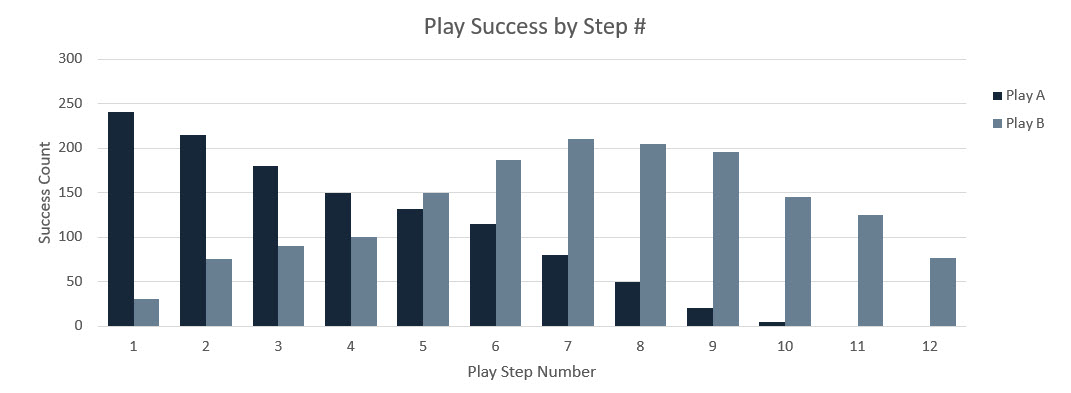

Once you’ve set up the fields, Plays, and Robots, set the test live! You may need to give Reps some notification or training on the test being run, but for the most part, their daily process won’t change. When a Playbooks user achieves the goal of a Play, they need to mark the Play as successful (a quick, click-based process). This flags the specific activity in Playbooks, so Play Committees, managers, and operations teams can compare the success of Play A to Play B to determine which is more effective. If the particular A/B test you’re running involves emails, you’ll need to look at email outcome reports to gauge success.

Measuring A/B Test Success

Using CRM reports, compare the two test Plays or email templates based on their outcomes. For details on how to create or modify reports in your CRM, see the Play Outcome Reports article or take the Playbooks + Salesforce Reporting course.

Email-based A/B tests require a different measure of success. When we send an email to a prospect, ideally they respond; we measure that success as the Response Rate. If they don’t respond, we can still measure success with link clicks or email opens. Email Outcome Reports highlight link clicks, email opens, and email replies, which are all signals of customer engagement. These signals can provide insight into the effectiveness of different content strategies and help teams improve the likelihood of email engagement with prospects and customers.
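
As a rough illustration of how these engagement signals could be compared once the counts are exported from a report, here is a minimal Python sketch. The `EmailOutcome` class, its field names, and the sample counts are hypothetical and are not part of Playbooks or any CRM API; the rates are simply each count divided by the number of emails sent.

```python
from dataclasses import dataclass


@dataclass
class EmailOutcome:
    """Hypothetical container for counts copied from an email outcome report."""
    sent: int
    opens: int
    clicks: int
    replies: int

    def rates(self) -> dict:
        """Return open, click, and response rates as fractions of emails sent."""
        if self.sent <= 0:
            raise ValueError("sent must be a positive number of emails")
        return {
            "open_rate": self.opens / self.sent,
            "click_rate": self.clicks / self.sent,
            "response_rate": self.replies / self.sent,
        }


# Illustrative counts only; substitute the numbers from your own reports.
template_a = EmailOutcome(sent=200, opens=90, clicks=30, replies=12)
template_b = EmailOutcome(sent=200, opens=110, clicks=24, replies=18)

for name, outcome in (("Template A", template_a), ("Template B", template_b)):
    print(name, outcome.rates())
```

Comparing all three rates side by side helps when one template wins on opens but loses on clicks or replies.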

Testing Principles and Best Practices

A/B testing can be a powerful tool for refining engagement strategy, but it must be approached properly to ensure that your success measurements are valid. We’ve noted that you should only change one thing between the two Plays, but what other considerations will have an impact on the measurement of success? Generally speaking, keep tests as simple as possible by only comparing two things that are directly related to each other (e.g., two Plays for the same campaign).

Sample size is also a very important factor in A/B testing. Don’t jump the gun and declare victory after 10 phone calls or emails. To determine how large a sample your test should encompass, consider how much total exposure your Play or email will have. Will the Play be active for a few months or in perpetuity? Is the talk track specific to a subset of your customers (e.g., industry, title) or meant for all customers? Other things to balance when determining sample size are risk tolerance, cost, and timing. How much time do you have to test an invite email before the actual event? Also think about the prospect’s or customer’s timetable: is it the end of the month or quarter? There isn’t one right answer for how large your sample size should be, but consulting with your Play Committee, Sales Operations team, and internal analytics team (if you have one) is advised.
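
If you want a starting number to bring to that conversation, one common textbook approximation estimates the sample needed per Play from the success rates you expect. The Python sketch below is an assumption-laden illustration, not a Playbooks feature: the function name, the 5% significance level, the 80% power default, and the example rates are all hypothetical choices you should adjust with your analytics team.

```python
import math
from statistics import NormalDist


def sample_size_per_play(rate_a: float, rate_b: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate activities needed per Play to detect rate_a vs. rate_b.

    Uses the standard normal-approximation formula for comparing two
    proportions with a two-sided test. All defaults are assumptions.
    """
    if rate_a == rate_b:
        raise ValueError("the two expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # value for the desired power
    pooled = (rate_a + rate_b) / 2
    numerator = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_beta * math.sqrt(rate_a * (1 - rate_a) + rate_b * (1 - rate_b))
    ) ** 2
    return math.ceil(numerator / (rate_a - rate_b) ** 2)


# Illustrative expectation: Play A succeeds 10% of the time, Play B 15%.
print(sample_size_per_play(0.10, 0.15))
```

The smaller the difference you expect between the two Plays, the larger the sample this estimate calls for, which is one reason ten calls or emails is rarely enough.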

Statistical significance should also be considered in high-impact testing; it helps you understand how much random chance could be swaying your results (this is also where an internal analytics team would be helpful). Statistical significance is commonly expressed as a “p-value”: roughly, the probability of seeing a difference at least as large as the one you observed if the two Plays actually performed the same. The lower the p-value, the less likely it is that your results are due to chance alone. There are many resources online to help you learn more about statistical significance; the key point is that random chance could be swaying your results one way or another.
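
To make the p-value idea concrete, here is a minimal Python sketch of a two-proportion z-test, one common way to compare two success rates. It is an illustration only: the function name and the example counts are hypothetical, and the normal approximation it relies on is less trustworthy for very small samples.

```python
import math


def two_proportion_p_value(success_a: int, total_a: int,
                           success_b: int, total_b: int) -> float:
    """Two-sided p-value for the difference between two success rates.

    Uses a pooled two-proportion z-test (normal approximation).
    """
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        return 1.0  # both Plays all-success or all-failure: no evidence of a difference
    z = (rate_a - rate_b) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Illustrative counts: successful Plays out of total attempts for each variant.
print(two_proportion_p_value(5, 10, 3, 10))          # small sample
print(two_proportion_p_value(150, 1000, 100, 1000))  # larger sample
```

With only ten attempts per Play, even a large-looking gap tends to produce a high p-value, while the same kind of gap over a thousand attempts produces a much lower one.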

However, for a simple, low-impact A/B test, don’t get stuck in analysis paralysis. Take advantage of Robot automation and CRM reporting to create quick tests and stay agile.